Introduction

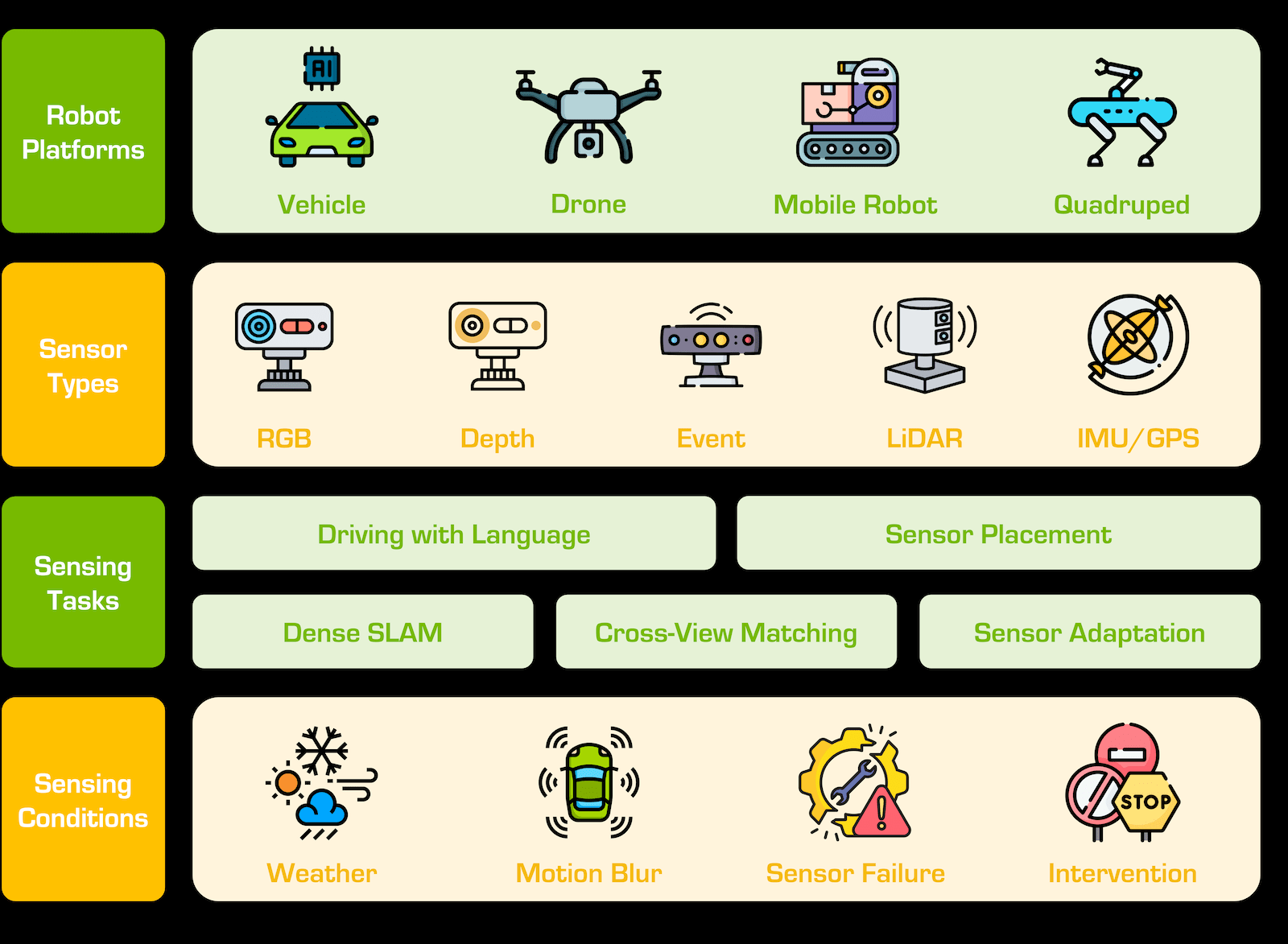

The RoboSense Challenge aims to advance the state of the art in robust robot sensing across diverse robot platforms, sensor types, and challenging sensing environments.

Five distinct tracks are designed to push the boundaries of resilience in robot sensing systems, covering critical tasks such as driving with language, social navigation, sensor placement optimization, cross-view image retrieval, and robust 3D object detection. Each track challenges participants to address real-world conditions such as platform discrepancies, sensor failures, environmental noise, and adverse weather, so that robot sensing models remain accurate and reliable in practical deployments.

The competition provides participants with datasets and baseline models for each track, facilitating the development of novel algorithms that improve robot sensing performance in both standard and out-of-distribution scenarios. By focusing on robustness, accuracy, and adaptability, the challenge aims to produce models that generalize across different conditions and sensor configurations, making them applicable to a wide range of autonomous systems, including vehicles, drones, and quadrupeds.

Challenge Tracks

There are five tracks in the RoboSense Challenge, with emphasis on the following robust robot sensing topics:

- Track #1: Driving with Language.

- Track #2: Social Navigation.

- Track #3: Cross-Sensor Placement 3D Object Detection.

- Track #4: Cross-Modal Drone Navigation.

- Track #5: Cross-Platform 3D Object Detection.

For additional implementation details, kindly refer to our DriveBench, Falcon, Place3D, GeoText-1652, and Pi3DET projects.

E-mail: robosense2025@gmail.com.

Venue

The RoboSense Challenge is affiliated with the 2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025).

IROS is one of the flagship conferences of the IEEE Robotics and Automation Society. IROS 2025 will be held from October 19th to 25th, 2025, in Hangzhou, China.

The IROS competitions provide a unique venue for state-of-the-art technical demonstrations from research labs throughout academia and industry. For additional details, kindly refer to the IROS 2025 website.

Contact

Timeline

Team Up

Register your team by filling in this Google Form.

Release of Training and Evaluation Data

Download the data from the competition toolkit.

Competition Servers Online @ CodaBench

Phase One Deadline

Shortlisted teams are invited to participate in the next phase.

Phase Two Deadline

Remember to include a link to your code in your submission.

Award Decision Announcement

Announced in conjunction with the IROS 2025 conference.

Awards

1st Place

Cash $5,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $1,000.

2nd Place

Cash $3,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $600.

3rd Place

Cash $2,000 + Certificate

- This award will be given to five awardees, one per track; each awardee receives $400.

Innovative Award

Certificate

- Recipients of this award will be selected by the program committee: ten awardees in total, two per track.

Toolkit

Competition Tracks

Track #1: Driving with Language

This track challenges participants to develop vision-language models that enhance the robustness of autonomous driving systems under real-world conditions, including sensor corruptions and environmental noise.

Participants are expected to design multimodal language models that fuse driving perception, prediction, and planning with natural language understanding, enabling the vehicle to make accurate, human-like decisions under diverse real-world sensing conditions.

Kindly refer to this page for more technical details on this track.

Track Organizers

Track #2: Social Navigation

This track challenges participants to develop RGB-D-based perception and navigation systems that enable autonomous agents to interact safely, efficiently, and in a socially compliant manner in dynamic human environments.

Participants will design algorithms that interpret human behaviors and contextual cues. Submissions should generate navigation strategies that balance efficiency and social compliance while addressing challenges such as real-time adaptability, occlusion handling, and ethical decision-making.

Kindly refer to this page for more technical details on this track.

Track Organizers

Track #3: Cross-Sensor Placement 3D Object Detection

This track challenges participants to design LiDAR-based 3D perception models, including those for 3D object detection and LiDAR semantic segmentation, that can adapt to diverse sensor placements in autonomous systems.

Participants will be tasked with developing novel algorithms that can adapt to and optimize LiDAR sensor placements, ensuring high-quality 3D scene understanding across a wide range of conditions, such as weather variations, motion disturbances, and sensor failures.

Kindly refer to this page for more technical details on this track.

Track Organizers

Track #4: Cross-Modal Drone Navigation

This track focuses on developing models for natural-language-guided cross-view image retrieval, specifically for scenarios where input data is captured from drastically different viewpoints, such as aerial (drone or satellite) and ground-level images.

Participants are tasked with designing models that can effectively retrieve corresponding images from large-scale cross-view image databases based on natural language text descriptions, even in the presence of common corruptions such as blur, occlusion, or sensor noise.

Kindly refer to this page for more technical details on this track.

Track Organizers

Track #5: Cross-Platform 3D Object Detection

This track focuses on the development of robust 3D object detectors that can seamlessly adapt across different robot platforms, including vehicles, drones, and quadrupeds.

Participants are expected to develop new adaptation algorithms that effectively transfer 3D object detection across three robot platforms with different sensor configurations and movement dynamics. Models are expected to be trained on vehicle data and to achieve strong performance on the drone and quadruped platforms.

Kindly refer to this page for more technical details on this track.

Track Organizers

Evaluation Servers

FAQs

Please refer to the Frequently Asked Questions for more detailed rules and conditions of this competition.

Organizing Team

Challenge Organizers

Program Committee

Industry Mentors

Associated Project

This project is affiliated with DesCartes, a CNRS@CREATE program on Intelligent Modeling for Decision-Making in Critical Urban Systems.

Terms & Conditions

This competition is made freely available to academic and non-academic entities for non-commercial purposes such as academic research, teaching, scientific publications, or personal experimentation. Permission is granted to use the data provided that you agree to the following:

1. That the data in this competition comes “AS IS”, without express or implied warranty. Although every effort has been made to ensure accuracy, we do not accept any responsibility for errors or omissions.

2. That you may not use the data in this competition or any derivative work for commercial purposes, for example, licensing or selling the data, or using the data for commercial gain.

3. That you include a reference to RoboSense (including the benchmark data and the specially generated data for academic challenges) in any work that makes use of the benchmark. For research papers, please cite our preferred publications as listed on our webpage.

To ensure a fair comparison among all participants, we require:

1. All participants must follow the exact same data configuration when training and evaluating their algorithms. Do not use any public or private datasets for model training other than those specified.

2. The theme of this competition is to probe the out-of-distribution robustness of autonomous driving perception models. Therefore, any use of the corruption and sensor failure types designed in this benchmark is strictly prohibited, including any atomic operation that makes up any of these corruptions.

3. To ensure the above two rules are followed, each participant must submit code that reproduces their results before the final results are announced. The code is used for verification only; we will manually check the training and evaluation of each participant's model.