👋 Welcome to Track #5: Cross-Platform 3D Object Detection of the 2025 RoboSense Challenge!

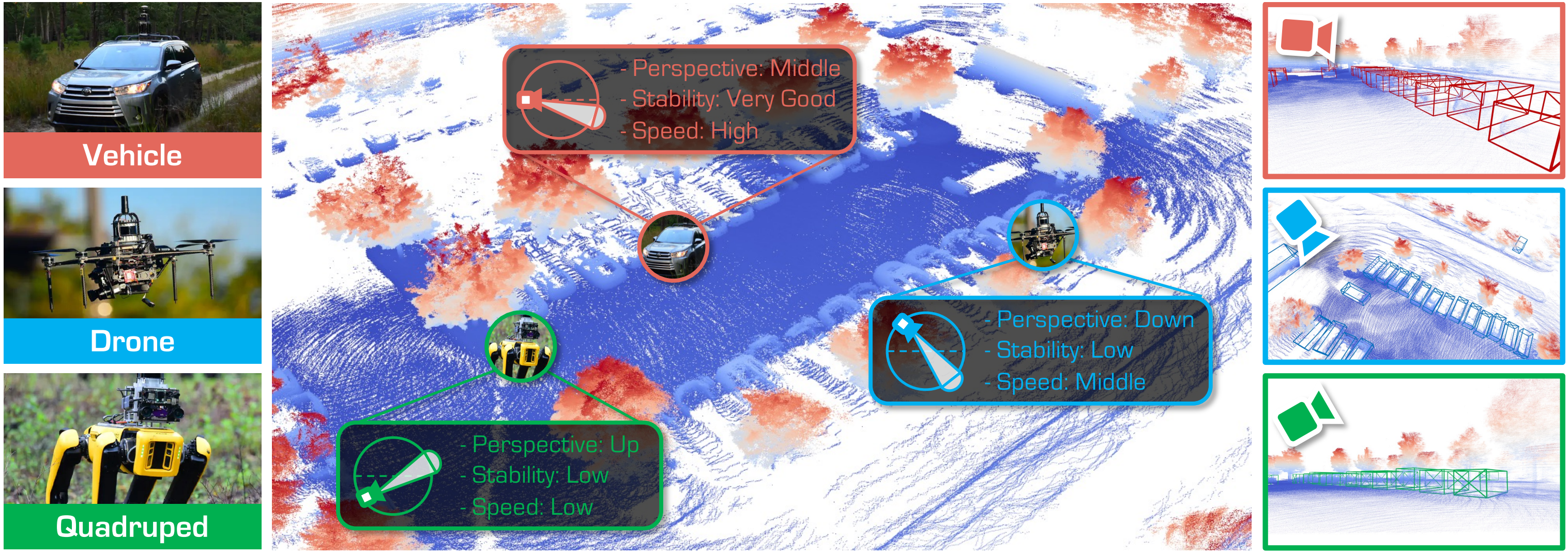

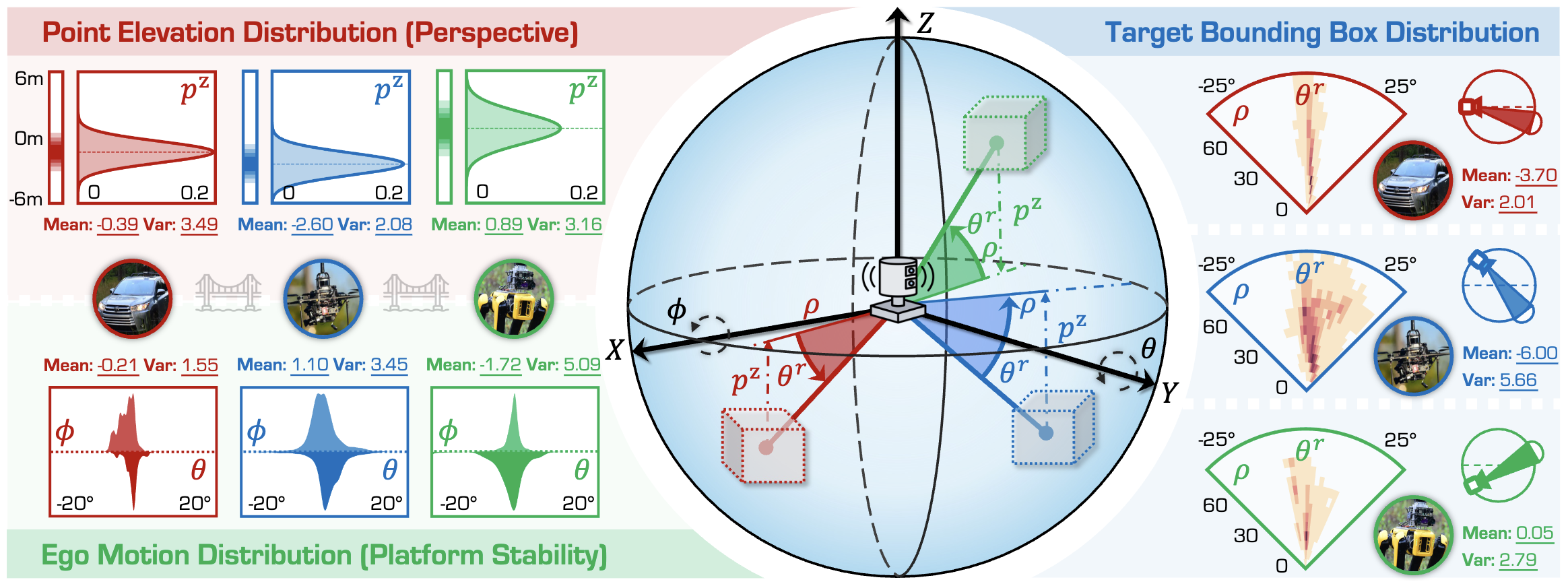

With the rise of robotics, LiDAR-based 3D object detection has garnered significant attention in both academia and industry. However, existing datasets and methods predominantly focus on vehicle-mounted platforms, leaving other autonomous platforms underexplored.

This track encourages participants to develop novel cross-platform adaptation frameworks that transfer knowledge from the well-studied vehicle platform to other platforms, including drones and quadruped robots.

🏆 Prize Pool: $2,000 USD (1st: $1,000, 2nd: $600, 3rd: $400) + Innovation Awards

🎯 Objective

As robotics continues to advance, LiDAR-based 3D object detection has become a focal point in both academia and industry. However, most existing datasets and methods target vehicle platforms, overlooking quadrupeds and drones. This challenge, built on our benchmark, aims to:

- Build on three robot platforms - vehicles, drones, and quadruped robots - to foster innovations in a unified LiDAR-based 3D object detection framework;

- Bridge geometric and data distribution disparities to achieve rapid knowledge transfer and model adaptation across different robot platforms;

- Lower annotation and deployment overhead, supporting collaborative sensing for heterogeneous robot teams in urban, disaster, and indoor scenarios.

🗂️ Phases & Requirements

Phase #1: Adaptation from Vehicle → Drone

Duration: June 15th, 2025 (Anywhere on Earth) - August 15th, 2025 (Anywhere on Earth)

Settings:

- Source platform: LiDAR scans with 3D bounding-box annotations from the Vehicle platform

- Target platform: Unlabeled LiDAR scans from the Drone platform

Ranking Metric: AP@0.50 (R40) for the Car class evaluated on Drone data.
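For reference, the R40 variant of average precision samples the precision-recall curve at 40 evenly spaced recall positions (1/40, 2/40, ..., 1) and averages the interpolated precision at those positions. The sketch below illustrates only that averaging step. It assumes that predictions have already been matched to ground truth at an IoU threshold of 0.50 and reduced to (recall, precision) points; the function name is hypothetical, and this is not the official evaluation code.

```python
def average_precision_r40(recalls, precisions):
    """Average precision over 40 recall positions, returned in percent.

    `recalls` and `precisions` are parallel lists describing points on a
    precision-recall curve (assumed to be computed elsewhere at IoU 0.50).
    """
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")

    total = 0.0
    for k in range(1, 41):
        r = k / 40
        # Interpolated precision: best precision achieved at recall >= r.
        # The small tolerance guards against floating-point rounding.
        candidates = [p for rec, p in zip(recalls, precisions) if rec >= r - 1e-9]
        total += max(candidates) if candidates else 0.0
    return 100.0 * total / 40
```

A detector that reaches full recall at perfect precision scores 100 under this definition, while one that stops at 50% recall can score at most 50, since the upper half of the recall positions contributes zero.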

Phase #2: Adaptation from Vehicle → Quadruped

Duration: August 15th, 2025 (Anywhere on Earth) - September 15th, 2025 (Anywhere on Earth)

Settings:

- Source platform: LiDAR scans with 3D bounding-box annotations from the Vehicle platform

- Target platform: Unlabeled LiDAR scans from the Quadruped platform

Ranking Metric: A weighted score combining:

- AP@0.50 (R40) for the Car class

- AP@0.50 (R40) for the Pedestrian class

Note: Both scores are computed on Quadruped platform data.
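The exact weights of the combined score are not stated here. As an illustration only, the sketch below combines the two per-class AP values with a configurable weight; the equal 0.5/0.5 default and the function name are assumptions, not the official ranking formula.

```python
def weighted_phase2_score(car_ap, pedestrian_ap, car_weight=0.5):
    """Combine Car and Pedestrian AP into a single score.

    `car_weight` is a hypothetical parameter: the official weights are not
    given in this document, so equal weighting is assumed by default.
    """
    if not 0.0 <= car_weight <= 1.0:
        raise ValueError("car_weight must lie in [0, 1]")
    return car_weight * car_ap + (1.0 - car_weight) * pedestrian_ap


# Example with illustrative AP values (in percent).
score = weighted_phase2_score(car_ap=30.0, pedestrian_ap=40.0)
```

Please check the evaluation server for the weights actually used before relying on a locally computed score.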

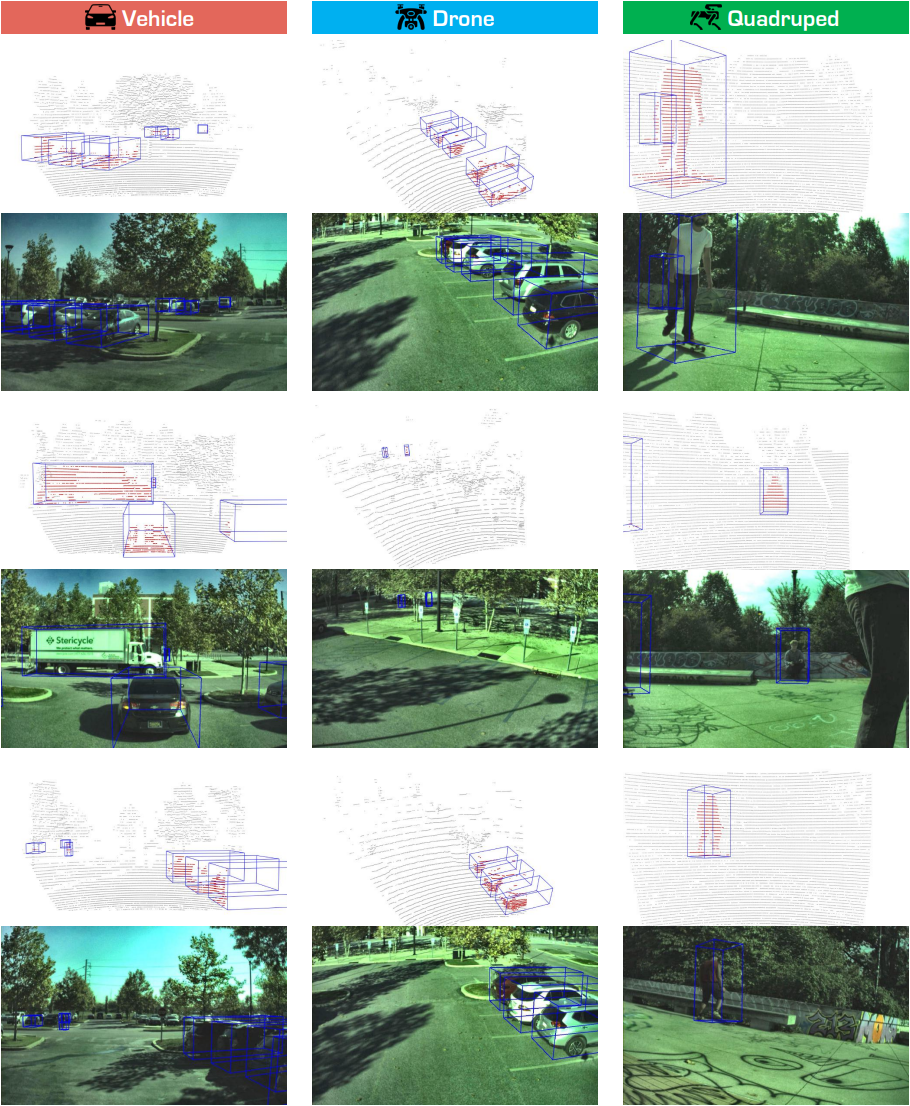

🚗 Dataset Examples

🛠️ Baseline Model

In this track, we adopt PV-RCNN as the base 3D object detector and use ST3D and ST3D++ as the baseline adaptation frameworks. Detailed environment setup and experimental protocols can be found in the Track5 GitHub repository.

Beyond the provided baseline, participants are encouraged to explore alternative strategies to further boost cross-platform performance:

- Treat the cross-platform challenge as a domain adaptation problem by improving pseudo-label quality and fine-tuning on target-platform data.

- Design novel data augmentation techniques to bridge geometric and feature discrepancies across platforms.

- Adopt geometry-agnostic 3D detectors, such as point-based architectures, that are less sensitive to platform-specific point-cloud characteristics.
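To make the first strategy above more concrete, one common way to improve pseudo-label quality is to keep only confident predictions on target-platform scans and to mark ambiguous ones so they are ignored during training. The sketch below is a deliberately simplified confidence filter and not the ST3D implementation; the `Detection` class, the box layout, and both threshold values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    # Hypothetical box layout: (x, y, z, dx, dy, dz, heading).
    box: Tuple[float, ...]
    label: str
    score: float


def split_pseudo_labels(
    detections: List[Detection],
    pos_thresh: float = 0.6,   # assumed value, tune per class and platform
    neg_thresh: float = 0.25,  # assumed value, tune per class and platform
) -> Tuple[List[Detection], List[Detection]]:
    """Split predictions into confident pseudo-labels and ambiguous boxes.

    Boxes scoring below `neg_thresh` are dropped and treated as background.
    """
    kept, ignored = [], []
    for det in detections:
        if det.score >= pos_thresh:
            kept.append(det)      # used as a training target
        elif det.score >= neg_thresh:
            ignored.append(det)   # excluded from the loss, neither positive nor negative
    return kept, ignored
```

Separating an "ignored" band from outright rejection keeps uncertain objects from being penalized as background, which matters when the source-trained detector is poorly calibrated on a new viewpoint.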

📊 Baseline Results

Phase 1 Results

| Method | Car BEV AP0.7@40 | Car 3D AP0.7@40 | Car BEV AP0.5@40 | Car 3D AP0.5@40 |
|---|---|---|---|---|
| PVRCNN-Source | 34.60 | 16.31 | 40.67 | 33.70 |
| PVRCNN-ST3D | 47.81 | 26.03 | 53.40 | 46.64 |
| PVRCNN-ST3D++ | 45.96 | 25.37 | 52.65 | 45.07 |

Phase 2 Results

| Method | Car BEV AP0.5@40 | Car 3D AP0.5@40 | Ped. BEV AP0.5@40 | Ped. 3D AP0.5@40 |
|---|---|---|---|---|
| PVRCNN-Source | 26.86 | 22.24 | 42.29 | 37.54 |
| PVRCNN-ST3D | 34.60 | 28.97 | 48.68 | 43.51 |
| PVRCNN-ST3D++ | 32.76 | 28.53 | 46.99 | 41.49 |
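As a quick reading aid, the absolute gains of each adaptation baseline over the source-only model can be computed directly from the tables. The sketch below uses the 3D AP0.5@40 columns; choosing those columns and the dictionary keys are assumptions made for illustration, since the ranking metric does not say whether BEV or 3D AP is used.

```python
# 3D AP0.5@40 values copied from the Phase 1 and Phase 2 tables.
source_only = {"phase1_car": 33.70, "phase2_car": 22.24, "phase2_ped": 37.54}
adapted = {
    "ST3D": {"phase1_car": 46.64, "phase2_car": 28.97, "phase2_ped": 43.51},
    "ST3D++": {"phase1_car": 45.07, "phase2_car": 28.53, "phase2_ped": 41.49},
}


def gains_over_source(adapted_scores, source_scores):
    """Absolute AP improvement (in points) of an adapted model over source-only."""
    return {
        key: round(adapted_scores[key] - source_scores[key], 2)
        for key in source_scores
    }


gains = {name: gains_over_source(scores, source_only) for name, scores in adapted.items()}
for name, per_setting in gains.items():
    print(name, per_setting)
```

On these columns, both baselines improve on the source-only model in every setting, and ST3D edges out ST3D++ throughout.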

🔗 Resources

We provide the following resources to support the development of models in this track:

| Resource | Link | Description |
|---|---|---|
| GitHub Repository | github.com/robosense2025/track5 | Baseline code and setup instructions |
| HuggingFace Dataset | HuggingFace Dataset | Dataset with training and test splits |
| Baseline Model | Pre-Trained Checkpoint | Weights of the baseline model |
| Registration Form | Google Form (Closed on August 15th) | Team registration for the challenge |
| Evaluation Server | CodaBench Platform | Online evaluation platform |

❓ Frequently Asked Questions

Below is a list of Frequently Asked Questions (FAQs). If you have further questions about this competition, please contact us at robosense2025@gmail.com.

Question 1

Answer 1

Question 2

Answer 2

Question 3

Answer 3

📖 References

@misc{robosense2025track5,
  title = {RoboSense Challenge 2025: Track 5 - Cross-Platform 3D Object Detection},
  author = {RoboSense Challenge 2025 Organizers},
  year = {2025},
  howpublished = {https://robosense2025.github.io/track5}
}