👋 Welcome to Track #3: Cross-Sensor Placement 3D Object Detection of the 2025 RoboSense Challenge!

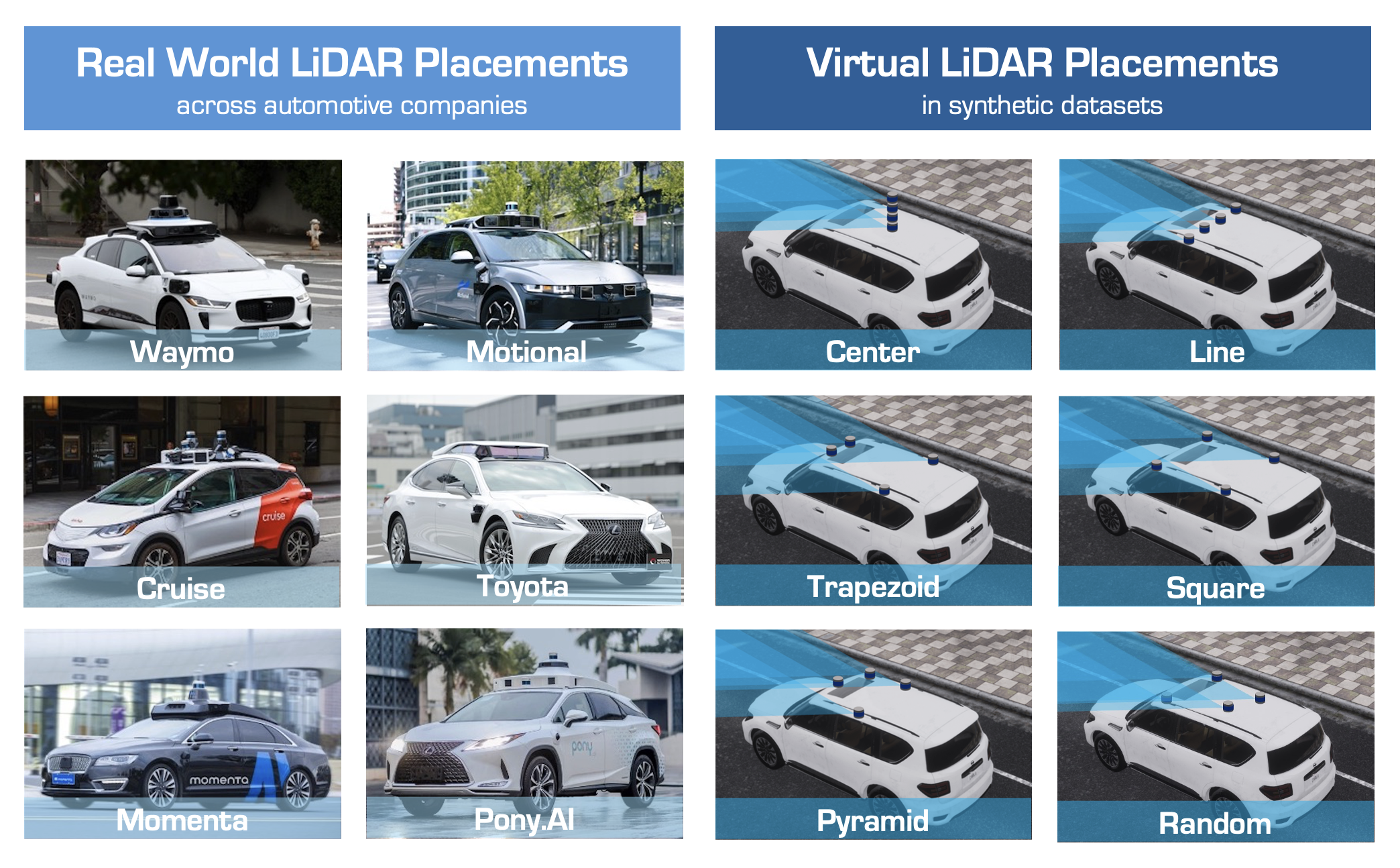

Autonomous driving models typically rely on well-calibrated sensor setups, and even slight deviations in sensor placements across different vehicles or platforms can significantly degrade performance. This lack of robustness to sensor variability makes it challenging to transfer perception models between different vehicle platforms without extensive retraining or manual adjustment.

Therefore, achieving generalization across sensor placements is essential for the practical deployment of the driving-based 3D object detection models.

🏆 Prize Pool: $2,000 USD (1st: $1,000, 2nd: $600, 3rd: $400) + Innovation Awards

🎯 Objective

This track evaluates the capability of generalizing perception models across sensor placements. Participants are expected to develop models that, when trained on fixed sensor placements, can generalize to diverse configurations with minimal performance degradation.

🗂️ Phases & Requirements

Phase #1: Development & Validation

Duration: June 15th, 2025 (Anywhere on Earth) - August 15th, 2025 (Anywhere on Earth)

In this phase, participants are provided with the training and validation datasets which include diverse LiDAR placements. The ground truth annotations are made available to support method development and performance validation.

Participants are expected to use these datasets to design, train, and refine their models.

Phase #2: Testing & Submission

Duration: August 15th, 2025 (Anywhere on Earth) - September 15th, 2025 (Anywhere on Earth)

In the second phase, participants will have access to a test dataset with LiDAR placements different from those in the training and validation sets. Ground truth annotations are not provided.

The participants are expected to run their developed models on the test set and submit the results in the json format. Model performance will be evaluated based on the submitted outputs.

The CodaBench evaluation server is open at: https://www.codabench.org/competitions/9284

🚗 Dataset

This track uses datasets collected in the CARLA simulator and formatted according to the nuScenes data structure. Please use the provided development kit to process the datasets.

This toolkit is based on the nuscenes-devkit but has been customized for this track.

It can be used in the same way as the official nuScenes dataset.

Do not install the official nuscenes-devkit, as it might not be fully compatible with the datasets used in this track.

🛠️ Baseline Model

In this track, BEVFusion-L is designated as the baseline method. Participants may choose to build upon the baseline or develop their own approaches independently. Detailed environment setup and experimental protocols can be found in the Track3 GitHub repository.

📊 Baseline Results

In this track, we use ...

| Method | mAP | mATE | mASE | mAOE | mAVE | mAAE | NDS |

|---|---|---|---|---|---|---|---|

| BEVFusion-L | 0.6051 | 0.1210 | 0.1235 | 1.1638 | 2.2521 | 0.3983 | 0.5383 |

🔗 Resources

We provide the following resources to support the development of models in this track:

| Resource | Link | Description |

|---|---|---|

| GitHub Repository | github.com/robosense2025/track3 | Baseline code and setup instructions |

| HuggingFace Dataset | HuggingFace Dataset | Dataset with training and test splits |

| Checkpoint | Pre-Trained Model | Weights of the baseline model |

| Registration | Google Form (Closed on August 15th) | Team registration for the challenge |

| Submission Portal | CodaBench Platform | Online evaluation platform |

❓ Frequently Asked Questions

Here, we provide a list of Frequently Asked Questions (FAQs) below for better clarity. If you have additional questions on the details about this competition, please reach out at robosense2025@gmail.com.

Can we use multi-modal (e.g., camera–LiDAR fusion) methods?

No. Only LiDAR data may be used for model training and evaluation. Camera data is provided solely for visualization and must not be used for either training or evaluation.

Can we use external data for pre-training, or employ pre-trained models?

No. You may only use the data provided by the competition for model training.

How are competition scores ranked?

Rankings are determined primarily by mAP. If the difference in mAP between submissions is within 0.01 (1%), then NDS will be used as a secondary criterion to break ties.

📖 References

@misc{robosense2025track3,

title = {RoboSense Challenge 2025: Track 3 - Sensor Placement},

author = {RoboSense Challenge 2025 Organizers},

year = {2025},

howpublished = {https://robosense2025.github.io/track3}

}